What are internal page duplicates and how to deal with them

Author - Constantin Nacul

The URLs are different, but the page content is the same. Is that a problem? It's just a couple of identical pages on the site. However, search engines may filter out duplicate content. To prevent this, you need to know how to get rid of internal page duplicates.

The concept of duplicate pages and their types

Duplicates are separate pages of a site whose content fully or partially matches. In essence, they are copies of an entire page, or of a specific part of it, available at different URLs.

What causes duplicates to appear on a site:

1. Automatic generation of duplicate pages by the site's content management system (CMS). For example:

https://site.net/press-centre/cat/view/identifier/novosti/

https://site.net/press-centre/novosti/

2. Webmaster mistakes. For example, the same product is listed in several categories and is available at different URLs:

https://site.net/category-1/product-1/

https://site.net/category-2/product-1/

3. Changes to the site structure, when existing pages get new addresses but copies at the old addresses are kept. For example:

https://site.net/catalog/product

https://site.net/catalog/category/product

There are two types of duplicates: full and partial.

What are full duplicates

These are pages with identical content available at different addresses. Examples of full duplicates:

1. URLs with repeated slashes ("//", "///") or with and without a trailing slash ("/"):

https://site.net/catalog///product; https://site.net/catalog//////product.

2. HTTP and HTTPS versions of a page: https://site.net; http://site.net.

3. Addresses with and without "www": http://www.site.net; http://site.net.

4. URLs of pages with index.php, index.html, index.htm, default.asp, default.aspx, home:

https://site.net/index.html;

https://site.net/index.php;

https://site.net/home.

5. Page URLs in upper and lower case:

https://site.net/example/;

https://site.net/EXAMPLE/;

https://site.net/Example/.

6. Changes in the hierarchical structure of the URL. For example, if a product is available at several different URLs:

https://site.net/catalog/dir/tovar;

https://site.net/catalog/tovar;

https://site.net/tovar;

https://site.net/dir/tovar.

7. Additional parameters and labels in the URL.

- URLs with GET parameters: https://site.net/index.php?example=10&product=25. This page returns the same content as https://site.net/index.php?example=25&cat=10.

- UTM tags. UTM tags pass information to analytics systems so that various traffic parameters can be analyzed and tracked. A landing page URL with UTM tags added looks like this: https://www.site.net/?utm_source=adsite&utm_campaign=adcampaign&utm_term=adkeyword

- gclid (Google Click Identifier) parameter. A tag that is automatically added to the destination URL to track campaign, channel and keyword data in Google Analytics. For example, if a visitor clicks an ad for https://site.net, the landing address will look like this: https://site.net/?gclid=123xyz.

- yclid label. It helps track the effectiveness of advertising campaigns in Yandex Metrica and lets you follow the actions of a visitor who arrived through an ad. The landing address looks like this: https://site.net/?yclid=321.

- openstat label. A universal label that is also used to analyze the effectiveness of advertising campaigns, site traffic and user behavior on the site. A link with the "openstat" label: https://site.net/?_openstat=231645789.

- Duplicates created by referral links. A referral link is a special link containing your identifier, which lets a site recognize who referred a new visitor. For example: https://site.net/register/?refid=398992; https://site.net/index.php?cf=reg-newr&ref=Uncertainty.

8. The first page of a paginated product catalog in an online store, bulletin board or blog. It often duplicates the category page or the section's main page: https://site.net/catalog; https://site.net/catalog/page1.

9. Incorrect 404 error handling creates many duplicates. For example: https://site.net/rococro-23489-rocoroc; https://site.net/8888-???.

The variable parts of these URLs (such as "rococro-23489-rocoroc" or "8888-???") can be any characters or digits. Pages like these should return a 404 server response code (not 200) or redirect to an existing page.
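Many of the full-duplicate variants above (items 1 to 5 and the tracking labels from item 7) differ only in ways that can be normalized mechanically. The Python sketch below maps such variants to one preferred form, so you can group crawled URLs and spot duplicates. It assumes a hypothetical site policy: HTTPS, no "www", lowercase paths, no index file, no trailing slash, and no utm_*, gclid, yclid or _openstat parameters. The name `normalize_url` and both parameter lists are illustrative and do not come from any tool. Referral parameters such as refid are site-specific, so you would need to add them yourself. The sketch also assumes the server handles "/" and "/index.php" identically.

```python
import re
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical site policy: adjust these lists to match your own site.
TRACKING_PARAMS = {"gclid", "yclid", "_openstat"}
INDEX_FILES = {"index.php", "index.html", "index.htm",
               "default.asp", "default.aspx", "home"}


def normalize_url(url: str) -> str:
    """Map common full-duplicate variants of a URL to one preferred form."""
    parts = urlsplit(url)

    # Main mirror: always HTTPS, host without "www".
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]

    # Collapse "//", "///" ... into "/" and use lower case
    # (only safe if the site really serves every path in lower case).
    path = re.sub(r"/{2,}", "/", parts.path).lower()

    # Drop a final index file such as /index.php or /home.
    segments = path.split("/")
    if segments[-1] in INDEX_FILES:
        segments[-1] = ""

    # Drop the trailing slash, but keep "/" for the home page.
    path = "/".join(segments).rstrip("/") or "/"

    # Remove tracking labels and sort the remaining GET parameters,
    # so the same parameters in a different order compare equal.
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if not key.startswith("utm_") and key not in TRACKING_PARAMS
    )
    return urlunsplit(("https", host, path, urlencode(query), ""))
```

If two URLs normalize to the same string, they are candidates for a 301 redirect or a canonical link. Items 6, 8 and 9 (URL hierarchy, pagination, 404 handling) depend on the site's routing and cannot be detected this way.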

What are partial duplicates

On partially duplicated pages, the content is largely the same, but some elements differ slightly.

Types of partial duplicates:

1. Duplicates on product cards and category (catalog) pages. Here, duplicates arise because the product descriptions shown on the catalog page are repeated on the product card pages. For example, a category page shows a description under each product, and the same text appears on that product's page.

To avoid this duplication, do not show full product information on the category (catalog) page, or use a unique description there.

2. Duplicates on filter, sorting, search and pagination pages, where the content is similar and only its order changes, while the description text and headings stay the same.

3. Duplicates on print or download versions of pages, whose data fully match the main pages. For example:

https://site.net/novosti/novost1

https://site.net/novosti/novost1/print

Partial duplicates are harder to spot, but their consequences show up systematically and hurt the site's ranking.
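The article does not describe this technique, but a common way to spot partial duplicates automatically is to split each page's text into overlapping word sequences ("shingles") and compute the Jaccard similarity of the two sets. Here is a minimal Python sketch. The shingle size of 3 and the 0.8 threshold are arbitrary assumptions, so tune them on your own pages. The function names are hypothetical.

```python
import re

SHINGLE_SIZE = 3   # words per shingle (assumption)
THRESHOLD = 0.8    # similarity treated as a partial duplicate (assumption)


def shingles(text: str, size: int = SHINGLE_SIZE) -> set:
    """Return the set of overlapping word n-grams in a text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < size:
        # Very short texts become a single shingle (or none at all).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(a: str, b: str, size: int = SHINGLE_SIZE) -> float:
    """Jaccard similarity of two texts' shingle sets, from 0.0 to 1.0."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 1.0  # two empty texts are trivially identical
    return len(sa & sb) / len(sa | sb)


def is_partial_duplicate(a: str, b: str) -> bool:
    """True if two texts are at least THRESHOLD similar."""
    return similarity(a, b) >= THRESHOLD
```

For example, you could compare the description shown on a category page with the text of the matching product card. A high score suggests that one of the two needs a unique description.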

What duplicate pages on the site lead to

Duplicates can appear on any site, regardless of its age or number of pages. They do not stop visitors from finding the information they need. Search engine robots are a different story: because the URLs differ, search engines treat such pages as separate documents.

A large amount of duplicate content leads to:

- Indexing problems. Duplicate pages inflate the overall size of the site, and bots that index these "extra" pages waste the site's crawl budget.

- "Necessary" pages may not get into the index at all. As a reminder, the crawl budget is the number of pages a bot can crawl in one visit to the site.

- The wrong page ranking in the SERP. The search engine algorithm may decide that a duplicate is more relevant to the query, so the search results will show a page other than the one you planned to promote. Another possible outcome: because the duplicates compete with each other, none of them gets into the SERP.

- Loss of link weight by promoted pages. Visitors link to the duplicates instead of the original pages, so the promoted pages lose natural link mass.

Tools for finding duplicate pages

So, we have covered what duplicates are, what types exist and what they lead to. Now let's move on to finding them. Here are some effective ways:

Search for duplicates using special programs

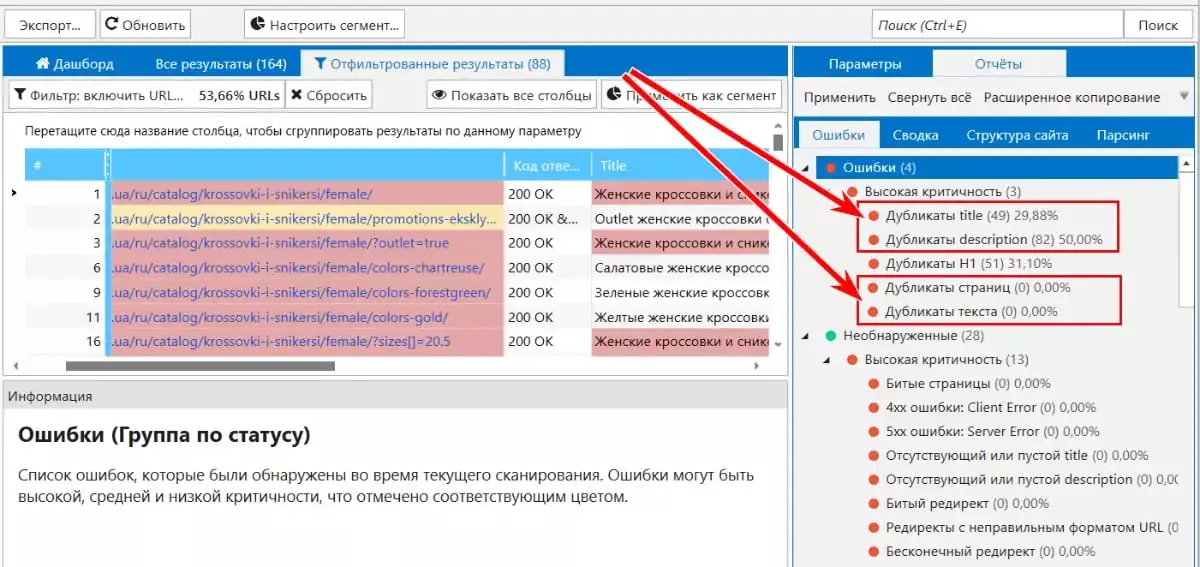

Netpeak Spider. A crawl with this tool detects pages with duplicate content: full duplicate pages, pages with duplicate <body> content, duplicate "Title" tags and duplicate "Description" meta tags.
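This is not how Netpeak Spider works internally. The Python sketch below only illustrates the same grouping idea. It assumes you already have the title, meta description and <body> text of each crawled URL. It then groups URLs whose values match once whitespace and case are normalized. The `Page` class and the field names are hypothetical.

```python
import hashlib
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Page:
    """Data extracted from one crawled URL (hypothetical structure)."""
    url: str
    title: str
    description: str
    body: str


def fingerprint(text: str) -> str:
    """Hash text after collapsing whitespace and case differences."""
    normalized = " ".join(text.split()).lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def duplicate_groups(pages, field: str) -> list:
    """Group URLs whose `field` ("title", "description" or "body") matches."""
    groups = defaultdict(list)
    for page in pages:
        groups[fingerprint(getattr(page, field))].append(page.url)
    # Only groups with two or more URLs are duplicates.
    return [urls for urls in groups.values() if len(urls) > 1]
```

Running `duplicate_groups` once per field gives three reports, similar in spirit to the duplicate title, description and body checks described above.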

Using Search Operators

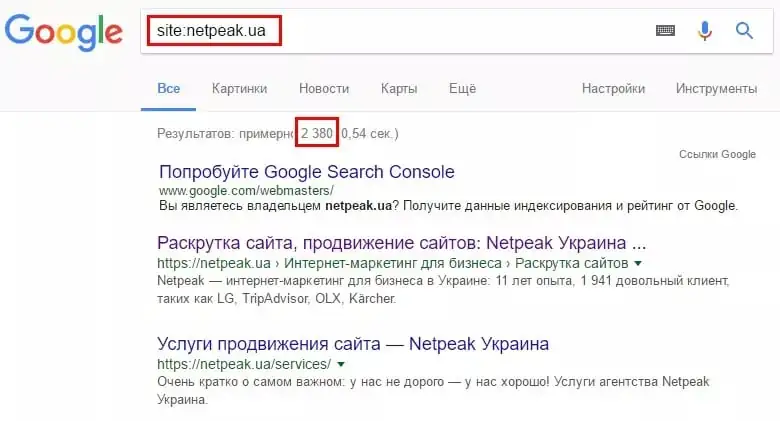

To look for duplicates, you can analyze pages that are already indexed with the "site:" search operator. In the search bar of a search engine such as Google, enter the query "site:examplesite.net". The results show the site's pages in the index, so you can see roughly how many pages are in the SERP. If that number differs greatly from the number of pages found by a crawler or listed in the XML sitemap, the site may have duplicates.

After reviewing the results, you will find duplicate pages, as well as "junk" pages that need to be removed from the index.
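To make the comparison with the XML sitemap concrete, the Python sketch below counts the <loc> entries in a standard sitemap and divides the "site:" estimate by that count. You enter the estimate by hand, because search engines only report an approximate number of results. The function names are hypothetical, and sitemap index files (<sitemapindex>) are not handled.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard XML sitemaps (sitemaps.org protocol).
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> list:
    """Return the <loc> values of a <urlset> sitemap (not a sitemap index)."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS)]


def index_ratio(indexed_estimate: int, sitemap_count: int) -> float:
    """Indexed pages per sitemap URL (1.0 means the two counts match)."""
    if sitemap_count == 0:
        raise ValueError("the sitemap contains no URLs")
    return indexed_estimate / sitemap_count
```

A ratio well above 1 suggests that the index holds extra URLs, such as duplicates or "junk" pages. A ratio well below 1 suggests that some pages have not been indexed.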

You can also search for a specific piece of text from a page that, in your opinion, may have duplicates. Put the text in quotation marks, add a space and the "site:" operator with your domain, and enter the query in the search bar. Specifying your site limits the results to its pages that contain this text. For example:

"Snippet of text from a site page that may have duplicates" site:examplesite.net

If the results contain only one page, that page has no indexed duplicates. If there are several pages, analyze them and find out why the text is repeated: they may be duplicates that need to be eliminated.

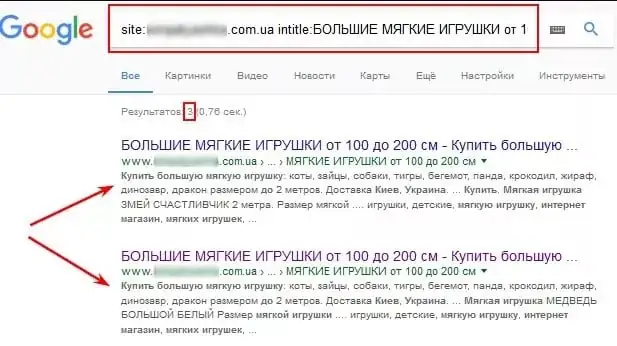

Similarly, the "intitle:" operator lets you check the "Title" content of the pages in the results. A repeated "Title" is a sign of duplicate pages. Combine it with the "site:" operator, using a query of this form:

site:examplesite.net intitle:"Full or partial text of the Title tag"

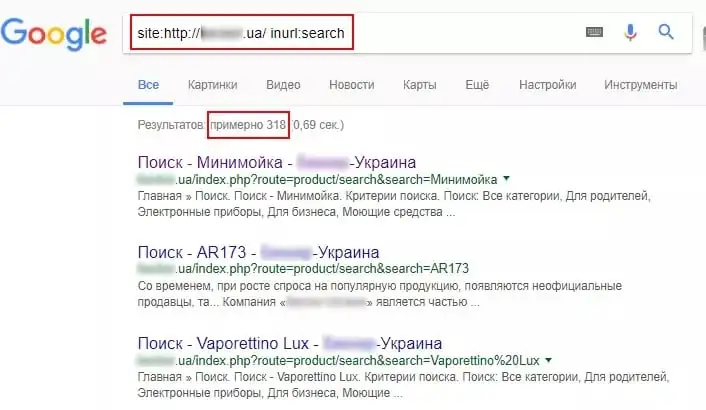

The "site:" and "inurl:" operators help identify duplicates on sorting pages (sort) and on filter and search pages (filter, search).

For example, to find sorting pages, enter this in the search bar: site:examplesite.net inurl:sort.

To find filter and search pages, run two queries: site:examplesite.net inurl:filter and site:examplesite.net inurl:search.

Keep in mind that search operators only show duplicates that have already been indexed, so you cannot rely on this method alone.

How to get rid of duplicates

We have covered what duplicates are, their types, their consequences and how to find them. Now for the most interesting part: how to stop them from harming optimization. Here are the methods for eliminating duplicate pages:

301 redirect

It is considered the main method for eliminating full duplicates. A 301 redirect automatically sends visitors from one page of a website to another. From the redirect, bots learn that the page is no longer available at this URL and has permanently moved to another address.

A 301 redirect allows you to pass link juice from a duplicate page to the main page.

This method is suitable for eliminating duplicates caused by:

- URLs in different letter cases;

- different URL hierarchies;

- the choice of the main site mirror (www or non-www, HTTP or HTTPS);

- problems with slashes in URLs.

For example, 301 redirects send visitors from these pages to https://site.net/catalog/product:

- https://site.net/catalog///product;

- https://site.net/catalog//////product;

- https://site.net/product.
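How you set up a 301 redirect depends on your web server or CMS. In practice, redirects are often configured there. As a server-independent illustration, here is a hypothetical Python WSGI middleware that answers the URLs above with a 301 to the canonical path. It assumes that all canonical paths are lowercase and that moved pages are listed in a hand-maintained `MOVED` dictionary. Both are illustrative assumptions, not rules from the article.

```python
import re

# Hypothetical map of old addresses to new ones after a structure change.
MOVED = {"/product": "/catalog/product"}


def redirect_duplicates(app):
    """WSGI middleware: send duplicate URL variants to the canonical path with a 301."""
    def middleware(environ, start_response):
        path = environ.get("PATH_INFO", "") or "/"
        # Collapse repeated slashes and assume canonical paths are lower case.
        canonical = re.sub(r"/{2,}", "/", path).lower()
        # Old addresses from the site's previous structure.
        canonical = MOVED.get(canonical, canonical)
        if canonical != path:
            query = environ.get("QUERY_STRING", "")
            location = canonical + ("?" + query if query else "")
            start_response("301 Moved Permanently", [("Location", location)])
            return [b""]
        # Already canonical: let the wrapped application answer.
        return app(environ, start_response)
    return middleware
```

The middleware preserves the query string, so parameters survive the redirect. To try it locally, wrap any WSGI application and serve it with the standard `wsgiref.simple_server.make_server`.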

Robots.txt file

This file tells search bots which pages or files should not be crawled.

To do this, use the "Disallow" directive, which tells search bots not to access unnecessary pages:

User-agent: *
Disallow: /page

Note that a page listed in robots.txt with the Disallow directive may still appear in the search results, for example because it was indexed earlier or because internal or external links point to it. Robots.txt instructions are advisory for search bots, so they cannot guarantee that duplicates are removed.
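Python's standard `urllib.robotparser` module offers a quick way to check how a parser reads your rules before you publish them. The sketch below tests the example rules. The domain, URLs and the `is_crawlable` helper are placeholders. Note that `Disallow: /page` is a prefix rule, so it also blocks /page2. Python's parser also treats a blank line after `User-agent` as the end of a group, which is why the rules in `ROBOTS_TXT` sit on consecutive lines. Search engines use their own parsers with extras such as wildcards, so treat this only as a sanity check.

```python
from urllib.robotparser import RobotFileParser

# The example rules; lines of one group must be consecutive, because
# Python's parser treats a blank line as the end of a group.
ROBOTS_TXT = """\
User-agent: *
Disallow: /page
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(url: str, agent: str = "Googlebot") -> bool:
    """True if the parsed rules allow `agent` to fetch `url`."""
    return parser.can_fetch(agent, url)
```

If the function returns False, the URL is blocked for that agent. Remember that a blocked URL can still appear in search results.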

Meta tags <meta name="robots" content="noindex, nofollow"> and <meta name="robots" content="noindex, follow">

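The <meta name="robots" content="noindex, nofollow"> tag tells the robot not to index the document and not to follow its links. Unlike robots.txt, this meta tag is a direct command that crawlers do not ignore. However, the page must not be blocked in robots.txt, because a bot has to crawl the page to see the tag.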

The <meta name="robots" content="noindex, follow"> tag tells the robot not to index the document but still to follow the links on it.

However, according to Google's John Mueller, over time the search engine starts treating "noindex, follow" as "noindex, nofollow".

In other words, when the bot first visits the page and sees "noindex, follow", it does not index the page but may still follow its internal links. If the bot returns later and sees "noindex, follow" again, the page is dropped from the index entirely. The bot then stops visiting it and stops taking its links into account. So in the long run there is no difference between the "noindex, follow" and "noindex, nofollow" meta tags.

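To use this method, place one of these meta tags in the <head> block of each duplicate page: <meta name="robots" content="noindex, nofollow"> or <meta name="robots" content="noindex, follow">. The shorthand <meta name="robots" content="none"> is equivalent to "noindex, nofollow".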
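To verify that the tags are actually in place, you can parse a page's HTML. The sketch below uses Python's standard `html.parser` module to collect robots meta directives. It also expands the `none` shorthand. The class and function names are hypothetical, and fetching the HTML is left out.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute names arrive lower-cased; valueless attributes give None.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() != "robots":
            return
        for token in attributes.get("content", "").split(","):
            if token.strip():
                self.directives.add(token.strip().lower())


def robots_directives(html: str) -> set:
    """Return the robots meta directives of a page, expanding "none"."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    directives = set(parser.directives)
    if "none" in directives:
        # "none" is shorthand for "noindex, nofollow".
        directives |= {"noindex", "nofollow"}
    return directives
```

A duplicate page is correctly closed from indexing when "noindex" appears in the returned set.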

rel="canonical" attribute

Use this method when a page cannot be deleted and must remain accessible.

The rel="canonical" attribute eliminates duplicates on filter and sorting pages and on pages with GET parameters and UTM tags. It is also used for print versions, for the same content in different language versions and across different domains. Not all search engines support cross-domain rel="canonical": Google understands it, but Yandex ignores it.

A canonical link specifies the address of the page that is preferred for indexing. For example, a site has a "Laptops" category with filters for different selection options: brand, color, screen resolution, case material, etc. If these filter pages are not going to be promoted, specify the general category page as their canonical.

How do you set the canonical page? Place a <link> tag with the rel="canonical" attribute between the <head> and </head> tags in the HTML code of the current page.

For example, for pages:

- https://site.net/index.php?example=10&product=25;

- https://site.net/example?filtr1=%5b%25D0%,filtr2=%5b%25D0%259F%;

- https://site.net/example/print.

the canonical page is https://site.net/example.

In HTML code it looks like this: <link rel="canonical" href="https://site.net/example">.
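A quick way to audit canonical links is to extract the `href` of the `<link rel="canonical">` tag from each page. You can then compare it with the URL you expect, such as https://site.net/example for the pages listed above. This Python sketch uses the standard `html.parser` module. `canonical_of` is a hypothetical helper, and sample HTML stands in for a fetched page.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Remember the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = {name: (value or "") for name, value in attrs}
        # rel may hold several space-separated values.
        if "canonical" in attributes.get("rel", "").lower().split():
            self.canonical = attributes.get("href") or None


def canonical_of(html: str):
    """Return the canonical URL declared in the page, or None."""
    parser = CanonicalParser()
    parser.feed(html)
    parser.close()
    return parser.canonical
```

A page whose result is None, or points somewhere other than the expected address, needs its canonical tag fixed.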

Conclusions

1. Duplicates are separate pages of the site, the content of which completely or partially coincides.

2. Reasons duplicates appear on a site: automatic generation, webmaster mistakes, changes in the site structure.

3. Duplicates on a site lead to: worse indexing; the wrong page ranking in the search results; loss of natural link mass by promoted pages.

4. Methods for finding duplicates: crawler programs (Netpeak Spider); search operators ("site:", "intitle:", "inurl:").

5. Tools for removing duplicates: the corresponding directives in the robots.txt file; the <meta name="robots" content="noindex, nofollow"> tag; the rel="canonical" tag; 301 redirects.

Have you eliminated the duplicate content? Now check the site again to see how well your actions worked and to evaluate the chosen method. We recommend analyzing the site for duplicates regularly: this is the only way to find and fix errors in time.

Source: Netpeak